Next: Robust Design Up: Types of analysis Previous: Sensitivity Contents

An optimization calculation usually consists of the minimization (or maximization) of one objective subject to one or more constraints. To that end the sensitivity of the objective is modified to take the constraint sensitivities into account leading to one combined sensitivity. This process takes place in a *FEASIBLE DIRECTION procedure. The ensuing sensitivity determines where the structure has to be thickened or thinned in order to reach a minimum of the objective function while not violating any constraint. Constraints can be taken into account by using the Gradient Projection Method or the Gradient Descent Method.

In the Gradient Projection Method the constraints are taken into account by projecting the unconstrained

sensitivities on the complement of the subspace consisting of the normals on

the active constraint hyperplanes. Suppose

the domain is n-dimensional (n design variables) and the subspace has the

dimension m (m constraints). Then the sensitivities of the constraints can

be arranged as basis vectors (the normal directions on the hyperplanes) in a

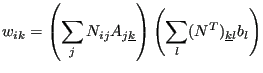

![]() matrix. The projection p of a vector b

on the subspace satisfies the orthogonality condition:

matrix. The projection p of a vector b

on the subspace satisfies the orthogonality condition:

| (815) |

Since p belongs to the subspace it can be written as a linear combination of

the basis vectors ![]() , where

, where ![]() is a

is a

![]() vector of

coefficients. Consequently:

vector of

coefficients. Consequently:

| (816) |

from which ![]() can be solved yielding:

can be solved yielding:

| (817) |

The complement of the projection vector is

![]() . Denoting

. Denoting

![]() , the constrained sensitivies

, the constrained sensitivies ![]() are obtained from the

unconstrained sensitivities

are obtained from the

unconstrained sensitivities ![]() by:

by:

| (818) |

or, in component notation:

| (819) |

where

|

(820) |

(no summation over k in the last equation).

Active constraints are constraints which

To this end the algorithm starts with all constraints which are fulfilled and removes the constraints one-by-one for which the Lagrange multiplier, satisfying

| (821) |

points to the feasible part of the space.

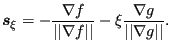

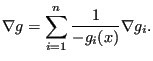

The Gradient Descent Method is an interior point method which means that the starting point for an optimization has to lie in the feasible domain. The influence of the constraints on the feasible direction is always taken into account independent of the distance to the constraint bound. This methods aims to stay away from the constraint boundaries as long as possible on the way to the local optimum. The feasible direction is calculated with the following formulas (the detailed derivation can be found in [18]):

|

(822) |

Here, ![]() is the gradient of the objective function,

is the gradient of the objective function, ![]() is the

gradient of the constraint and

is the

gradient of the constraint and ![]() is a value between 0 and 1 defining

the weight of the constraint gradient on the descent direction. Choosing a

value for

is a value between 0 and 1 defining

the weight of the constraint gradient on the descent direction. Choosing a

value for ![]() between 0.9 and 1.0 leads to meaningful results. By subtracting a

portion of the gradient of the constraint one tends to stay away from

the constraint boundary (the constraint becomes more negative). In case more

than one constraint is defined, the assembly of the gradient vector of all

constraint functions

between 0.9 and 1.0 leads to meaningful results. By subtracting a

portion of the gradient of the constraint one tends to stay away from

the constraint boundary (the constraint becomes more negative). In case more

than one constraint is defined, the assembly of the gradient vector of all

constraint functions ![]() is done using the logarithmic Barrier function:

is done using the logarithmic Barrier function:

|

(823) |